Today marks a turning point in holding tech companies accountable. The European Parliament is voting to endorse the Digital Services Act - the most sweeping rules to date for regulating tech companies anywhere in the world.

KENZO TRIBOUILLARD/AFP via Getty Images

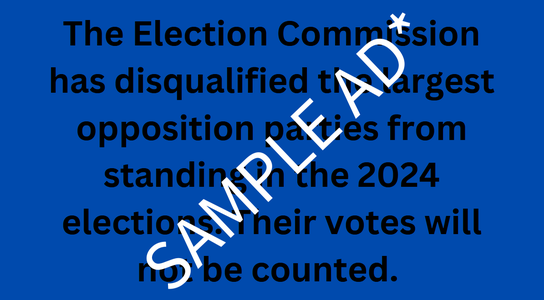

The DSA navigates a thorny line between protecting people’s free speech online and tackling illegal content, rights abuses and social harms - including growing issues such as viral disinformation and electoral interference. It finally offers a way to systematically lift the lid on the inner workings of the biggest tech companies that play an increasingly dominant role in how we experience the world.

But the process for getting here was fraught with tension: more than two intense years of negotiations between EU institutions, aggressive lobbying by the best-resourced industry representatives in Brussels, and the growth of a diverse movement of activists working to put people ahead of profit. So ultimately, what was achieved?

The Good

Limits on surveillance advertising

Going beyond existing data protection rules, the DSA bans online platforms from using minors’ data and sensitive data to serve users with targeted ads. As our polling has shown, sensitive data - such as records about your health, race, ethnicity, and political or religious beliefs - is amongst the most controversial information used to target people with ads.

With some caveats (see below), the DSA effectively bans the controversial practice of political microtargeting in Europe. This issue was a major campaign priority for civil society and a cross-party group of MEPs, and while the result falls short of a more comprehensive ban, it represents an important incremental step towards calling time on Big Tech’s surveillance business model.

Due diligence for big platforms

For the first time, very large online platforms (defined as those with at least 45 million monthly active users in the EU) must conduct periodic risk assessments of how they deal with illegal as well as legal but harmful content, and must also mitigate those risks. Much was at stake in ensuring that the risk assessments cover all fundamental rights and can act as a meaningful check on new threats as they emerge. Big platforms will also be subject to independent annual audits to verify how they fulfill their requirements.

Public scrutiny via data access

Public interest researchers will be able to apply for access to platform data as another layer of independent scrutiny of how big online platforms work and their impact in the world. Crucially, campaigners were able to secure language specifying that this includes vetted civil society organisations rather than academic researchers alone, a restriction that would have vastly limited the provision's scope. Recent trends have shown how platforms can intimidate researchers and cut off access to tools which enable limited scrutiny of how they work (not least the anticipated shutting down of CrowdTangle by Meta). This provision offers an opportunity to safeguard and grow this vital research.

Oversight of big platforms by the European Commission

The DSA will not change anything unless it is reliably enforced. Learning the lessons from the problems seen with the General Data Protection Regulation (GDPR) - which relies largely on Ireland to oversee tech giants - the DSA empowers the European Commission to oversee all big online platforms' compliance with their due diligence requirements.

The Bad

Last-minute watering down of the restrictions on surveillance ads

In a major capitulation to industry lobbying, the limits on ad targeting do not apply to ad tech giant Google’s hugely profitable system of display advertising or to news websites. They cover only online platforms which share user content (such as Facebook, Instagram or YouTube) but crucially do not include sites which host self-generated content. New language was also inserted at the last minute narrowing the scope to “profiling” using sensitive data - offering tech companies potential wiggle room to avoid meaningful change.

Users not protected from deceptive design practices (or ‘dark patterns’)

In a wholesale gutting of user protections against manipulative design techniques (e.g. those that coerce people into certain choices, as seen with cookie banners), the provision was made entirely redundant by last-minute exemptions in the text.

‘Trade secrets’ exemption as a major loophole

Tech companies will be able to invoke ‘trade secrets’ as a reason not to provide researchers with data access for public interest scrutiny. Despite strong pushback from campaigners, this exemption was maintained.

The Ugly

A bitter aftertaste

The way the EU rules were agreed exemplifies many problems of EU lawmaking. These included staggering levels of tech industry lobbying up until the very last moments, unequal access to the leaked drafts needed to engage in the process, and an incredibly opaque closed-door “trilogue” process to agree the final rules. Under huge pressure to close the file under the French EU Council Presidency, the last political trilogue lasted for nearly 16 hours until 2am. As highlighted by CSOs, it would take another 53 days for the public to see the actual result. For an insight into the disproportionate access of Big Tech to senior officials, see also our @EUTechWatch Twitter bot.

While this mixed picture of what was ultimately achieved may dampen enthusiasm for the final result of the Digital Services Act, it is important not to lose sight of what these rules mean at a global level. EU legislators have ventured where others - notably the US - have so far failed to force meaningful oversight. While much will depend on how these rules are enforced, both by the Commission and at national level, the foundations set by the DSA are promising. Together with others, we at Global Witness will be watching closely.